Model-Based Testing for UFT: 10 Ways to Optimise and Accelerate Your Functional Test Automation (Part 1)

Its ongoing development, including the recent introduction of the ‘UFT Family’, ensures that Micro Focus UFT remains one of the best-of-breed frameworks for functional Test Automation. It also remains the first choice for QA teams faced with hard-to-test systems and packaged applications. Meanwhile, increasingly simple test authorship and growing coding support facilitate faster, more effective automation.

Model-Based Testing is a proven methodology for systematically and automatically identifying test cases associated with complex systems. It facilitates greater and more reliable functional test coverage, while increasing testing agility by automating both test asset creation and maintenance.

That automation includes the maintenance of test scripts and data for UFT, while today it is quick and simple to integrate UFT with model-based testing technology. This two-part article will therefore consider 10 reasons why you might consider model-based test design for UFT, setting out ways in which it can enable faster, more rigorous automated testing.

Read below to discover how modelling can automatically create coverage-optimised UFT tests, overcoming system complexity while bringing coders and non-coders into close collaboration. Next week, we’ll see how this same approach automatically allocates data as tests run, as well as how model-based test maintenance keeps up with rapid change and provides a single point of communication for shift left testing.

If you would like to see this approach in action, please join us on March 12th 2020 for the next Vivit Webinar: Model-Based Testing for UFT One and UFT Developer: Optimized Test Script Generation.

10 Ways to Optimise and Accelerate Your Functional Test Automation

1. Automate test script creation

The time, complexity, and skill required to code effective functional tests for complex systems remain one of the greatest barriers to adopting test automation in a world of iterative delivery. Half of respondents in the last World Quality Report stated that an inability to achieve appropriate levels of automation is hindering their ability to test in agile contexts, while 27% of respondents in a more recent survey cited “script creation/maintenance” as one of their most time-consuming QA activities.

UFT already comes equipped with a range of tools to meet the challenge of creating complex scripting, including IDE templates, growing language support, an object Spy and a re-usable Object Repository.

However, complex applications require a vast number of tests in order to achieve sufficient test coverage, hitting the myriad positive and negative scenarios that exist across their maze of components. With thousands of tests to maintain and execute in short iterations, there is no time to copy and edit boilerplate code, or to record tests for the vast number of logical permutations. Automation engineers instead require a systematic and automated approach, capable of re-using the quickly created UFT objects to create every test in one go.

This is where model-based testing comes in, providing a rapid approach for creating test suites from existing UFT object repositories:

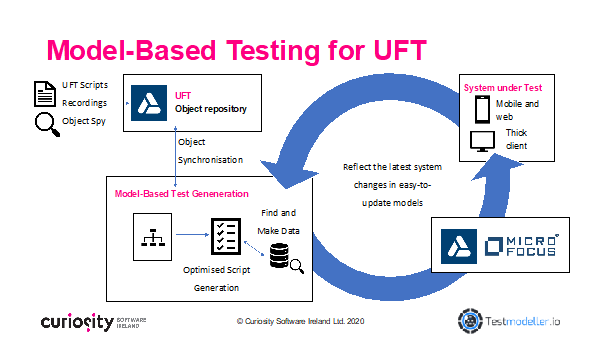

Figure 1: Model-based test generation using UFT object repositories which are themselves quick to build.

It is the process of formally modelling the logic of the system under test that enables this automated test creation, by virtue of the logical precision of the formal models.

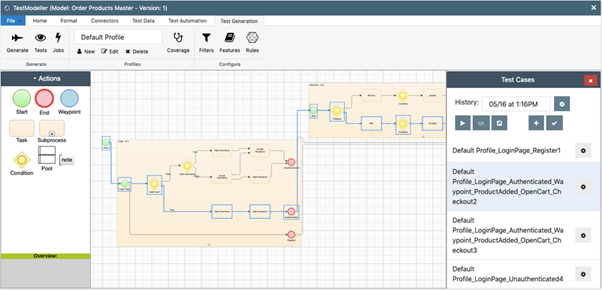

Test Modeller, for instance, builds visual flowchart models that map out the cause and effect logic of the system, constituting a linked-up series of “if this, then this” statements. Each path through the model reflects a different scenario or test case, reaching an endpoint in the model that forms the expected result.

The mathematical precision of these models means that every test case or “path” can be identified automatically, applying algorithms that work like a car’s GPS finding routes through a city map:

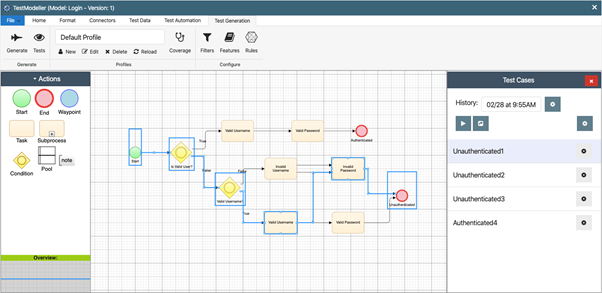

Figure 2 - Automated test case design identifies test cases from visual flowchart models.
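The idea of treating each path through a flowchart as a test case can be sketched in a few lines of Python. This is a minimal illustration only: the login model, its block names, and the `all_paths` helper are hypothetical, and the depth-first search shown here is a generic technique rather than the algorithm used by any particular tool.

```python
from typing import Dict, List

# Hypothetical flowchart of a login screen. Each key is a block in the model;
# each value lists the blocks that can follow it. Blocks with no successors
# are endpoints, i.e. expected results.
LOGIN_MODEL: Dict[str, List[str]] = {
    "Start": ["Enter valid username", "Enter invalid username"],
    "Enter valid username": ["Enter valid password", "Enter invalid password"],
    "Enter invalid username": ["Error shown"],
    "Enter valid password": ["Logged in"],
    "Enter invalid password": ["Error shown"],
    "Logged in": [],
    "Error shown": [],
}


def all_paths(model: Dict[str, List[str]], start: str) -> List[List[str]]:
    """Return every path from `start` to an endpoint using depth-first search."""
    paths: List[List[str]] = []

    def walk(block: str, visited: List[str]) -> None:
        visited = visited + [block]
        successors = model[block]
        if not successors:
            # Reached an endpoint: the visited blocks form one test case.
            paths.append(visited)
            return
        for nxt in successors:
            # Simplification: never revisit a block, so looping models terminate.
            if nxt not in visited:
                walk(nxt, visited)

    walk(start, [])
    return paths


test_cases = all_paths(LOGIN_MODEL, "Start")
for number, path in enumerate(test_cases, start=1):
    print(f"Test {number}: " + " -> ".join(path))
```

For this small model the search yields three test cases: one successful login and two that end at the error message.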

This approach removes the time and complexity of repetitious test design: to generate scripts from the model, you simply assign objects in a UFT repository or functions in a library to the blocks in the flowchart.

Alternatively, you can drag and drop UFT modules from the repository to build models from scratch. This leverages a central rule library to model rapidly, applying rules to model equivalence classes automatically as each UFT module is dragged to the canvas. For example, a “First Name” field might have a rule specifying that it should be tested with a valid value, an invalid value, and an empty value:

Figure 3 – Importing an automation page object models pre-defined equivalence classes automatically.
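The “First Name” rule described above can be pictured as a lookup in a shared rule library. The sketch below is an assumption-laden illustration: the `RULES` dictionary, the field types, the sample values, and the `expand_field` helper are all hypothetical and do not reflect how any specific product stores its rules.

```python
from typing import Dict, List, Tuple

# Hypothetical central rule library: field type -> equivalence classes.
# Each class is a label plus one representative value.
RULES: Dict[str, Dict[str, str]] = {
    "name": {"valid": "Alice", "invalid": "A1!ce#", "empty": ""},
    "email": {"valid": "alice@example.com", "invalid": "alice@", "empty": ""},
}


def expand_field(field_name: str, field_type: str) -> List[Tuple[str, str, str]]:
    """Return one (field, class label, value) branch per equivalence class."""
    return [
        (field_name, label, value)
        for label, value in RULES[field_type].items()
    ]


# "Dragging" a First Name field onto the canvas adds three branches at once.
first_name_branches = expand_field("First Name", "name")
for field, label, value in first_name_branches:
    print(f"{field}: {label} -> {value!r}")
```

Keeping the rules in one place means that a change to what counts as a valid name only has to be made once, and every model that uses the field picks it up.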

Hitting “generate” compiles the executable test scripts in seconds, using an automated generator and code templates to ensure that scripts match the UFT framework perfectly. The result? A complete test suite that has been created in one click, and all without the need to edit and compile every repetitious permutation needed for rigorous coverage.
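Template-driven generation of this kind can be sketched roughly as follows. Everything here is an assumption made for illustration: the step template, the `BLOCK_BINDINGS` mapping, the “Shop” application, and the `generate_script` helper are hypothetical, and the emitted lines only imitate the general shape of a UFT script statement rather than reproducing a real generator’s output.

```python
from string import Template
from typing import Dict, List, Tuple

# Hypothetical code template for a single test step.
STEP_TEMPLATE = Template("$object_ref.$action $argument")

# Hypothetical bindings from model blocks to repository objects and actions.
BLOCK_BINDINGS: Dict[str, Tuple[str, str, str]] = {
    "Enter valid username": (
        'Browser("Shop").Page("Login").WebEdit("username")', "Set", '"alice"'),
    "Enter valid password": (
        'Browser("Shop").Page("Login").WebEdit("password")', "Set", '"s3cret"'),
    "Click login": (
        'Browser("Shop").Page("Login").WebButton("Login")', "Click", ""),
}


def generate_script(test_name: str, path: List[str]) -> str:
    """Render one script: a comment header plus one templated line per block."""
    lines = [f"' Generated test: {test_name}"]
    for block in path:
        object_ref, action, argument = BLOCK_BINDINGS[block]
        step = STEP_TEMPLATE.substitute(
            object_ref=object_ref, action=action, argument=argument)
        # Actions without an argument leave a trailing space; trim it.
        lines.append(step.rstrip())
    return "\n".join(lines)


script = generate_script(
    "Successful login",
    ["Enter valid username", "Enter valid password", "Click login"],
)
print(script)
```

Because every step comes from the same template and the same bindings, a change to either is reflected in every regenerated script.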

2. Ease of test “scripting” – even for non-coders

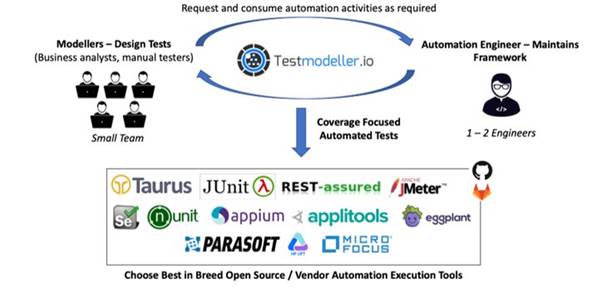

The rapid reusability of existing code in this approach not only supports engineers in creating scripts; it enables those without coding skills to create test suites for even complex systems.

One of the greatest challenges to test automation adoption remains the skills gap it creates. As Angie Jones comments, coded frameworks require people with the mindset of a tester, but the skillset of the developer.

However, dedicated automation engineers are in high demand and short supply, and 46% of respondents to the last World Quality Report cite a lack of “skilled and experienced automation resources” as a barrier to achieving their desired level of automation.

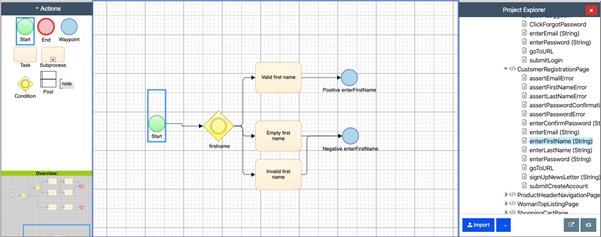

Generating automated tests from re-usable objects and visual models enables enterprise-wide automation adoption, facilitating close collaboration between coders and non-coders. This effectively “deskills” automation for non-coders, allowing anyone to design tests visually for complex systems.

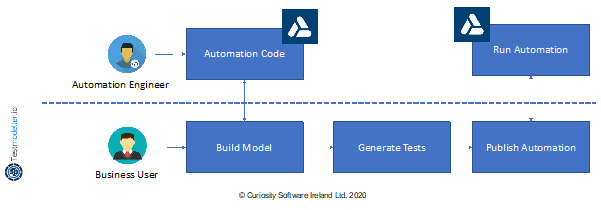

This approach combines the simplicity of low code with the flexibility of scripting. An otherwise overworked engineering team can make all their code re-usable, feeding it into a central library for use by the broader team:

Figure 4 – Business Analysts and ‘manual’ testers can re-use UFT code maintained by skilled engineers.

Business analysts and testers with manual backgrounds alike are already often familiar with the BPMN-style modelling used in the above examples, and can therefore build automated tests from models. Meanwhile, the ability to feed custom code retains the versatility needed to test complex and custom application logic:

Figure 5 – The ability to feed custom automation code in enables Business Users to test even complex logic.

3. Optimal test case coverage

So, model-based testing enables rapid test creation, and also provides an approach for creating complex scripts without needing to edit complex code. The ability to apply systematic, automated algorithms also overcomes one of the greatest barriers to rigorous automated testing: identifying what tests to execute against massively complex systems.

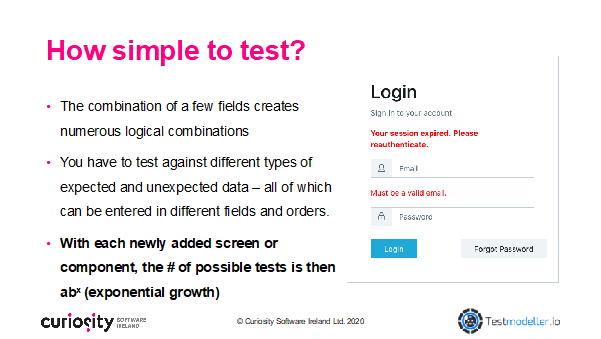

Systems under test today contain more routes through their logic than any human can comprehend. Each route could be a test, and those systems therefore contain more tests than could ever be executed in short iterations:

Figure 6 – Even a simple UI can have numerous fields to test; whole systems are beyond human comprehension.

The sheer number of possible tests that exist across components means that exhaustive test execution is impossible even with automated test execution, and that’s before the time to create and set-up tests and data is considered.
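A quick back-of-the-envelope calculation shows how fast the number of possible tests grows. The form below and its equivalence-class counts are invented purely for illustration.

```python
from math import prod

# Hypothetical registration form: field -> number of equivalence classes
# (for example valid, invalid, empty) that could be tested for that field.
FIELD_CLASSES = {
    "First Name": 3,
    "Last Name": 3,
    "Email": 3,
    "Password": 4,
    "Country": 5,
    "Date of Birth": 4,
    "Terms accepted": 2,
}

# Every combination of one class per field is a distinct possible test.
combinations_per_screen = prod(FIELD_CLASSES.values())

# An end-to-end journey through three screens of similar size multiplies again.
combinations_end_to_end = combinations_per_screen ** 3

print(f"One screen: {combinations_per_screen:,} combinations")
print(f"Three screens: {combinations_end_to_end:,} combinations")
```

Seven modest fields already give 4,320 combinations on a single screen, and chaining three such screens pushes the total into the tens of billions.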

QA teams today therefore require an approach to prioritise tests based on risk, reliably identifying the optimal set of tests they can and should run in an iteration.

Performing this manually is virtually impossible when faced with today’s complex systems and short iterations. There are simply too many possible tests, and 43% of respondents to the last World Quality Report cite difficulty in identifying the right areas on which tests should focus as a challenge when applying testing in agile development projects.

Manual, unsystematic test design in turn leads to insufficient test coverage and a lack of measurability. It inevitably misses some possible tests, repeatedly creating the most obvious “happy path” scenarios that are found front of mind. These positive tests are then executed repeatedly, leading to resource-intensive over-testing of some functionality, while negative scenarios and unexpected results go untested.

Model-Based Test Generation harnesses the power of computer processing to enable systematic, measurable test design. Today’s systems might be too complex for human comprehension, but they are no match for mathematical algorithms applied to logical models. These algorithms can identify every path through complex models in minutes, identifying every possible test contained in the flowchart.

Automated test design is therefore not only faster than creating test scripts manually, but it also ensures testing rigour. It systematically identifies all possible tests in the modelled logic, while providing a reliable and objective measure with which to say when testing is “complete”.

4. Reliable risk-based testing

In practice, the desired set of generated paths will rarely be “all paths”, as the number of tests needed for exhaustive testing is too large for execution. Testing must instead be optimised based on time and risk. Fortunately, various established optimisation algorithms can reduce the total number of generated tests without compromising the logical test coverage.

This automated technique effectively “de-duplicates” the tests, creating a lean test suite that contains only logically distinct scenarios. Tests might cover every block (node) in the model, or every decision (edge), while “all in/out edges” generates tests that cover every combination of edge in and out of each block.
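As a rough sketch of how edge coverage can shrink a test suite, the example below enumerates every path through a small model and then greedily keeps only the paths needed to cover each edge at least once. The checkout model and helper names are hypothetical, and the greedy selection is a simple heuristic chosen for illustration, not the optimisation algorithm of any particular tool.

```python
from itertools import product
from typing import List, Set, Tuple

Edge = Tuple[str, str]

# Hypothetical checkout model with two consecutive decisions.
PAYMENT_OPTIONS = ["Pay by card", "Pay by voucher"]
DELIVERY_OPTIONS = ["Deliver to home", "Collect in store"]

# "All paths": every combination of the two decisions.
all_paths: List[List[str]] = [
    ["Start", payment, "Confirm", delivery, "End"]
    for payment, delivery in product(PAYMENT_OPTIONS, DELIVERY_OPTIONS)
]


def edges(path: List[str]) -> Set[Edge]:
    """Return the set of edges (consecutive block pairs) a path traverses."""
    return set(zip(path, path[1:]))


def greedy_edge_cover(paths: List[List[str]]) -> List[List[str]]:
    """Pick paths one at a time, always taking the one covering most new edges."""
    uncovered: Set[Edge] = set().union(*(edges(p) for p in paths))
    selected: List[List[str]] = []
    while uncovered:
        best = max(paths, key=lambda p: len(edges(p) & uncovered))
        selected.append(best)
        uncovered -= edges(best)
    return selected


optimised = greedy_edge_cover(all_paths)
print(f"All paths: {len(all_paths)}, edge-coverage suite: {len(optimised)}")
for path in optimised:
    print(" -> ".join(path))
```

Here four exhaustive paths reduce to two, yet every decision outcome in the model is still exercised at least once. The saving grows quickly as more decisions are chained together.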

Each algorithm reduces the total number of tests to an executable quantity. Automated testing in turn avoids wasteful over-testing, while still testing every distinct negative and positive scenario. Tests become manageable, without increasing the negative risk of leaving scenarios untested and letting costly bugs leak into production.

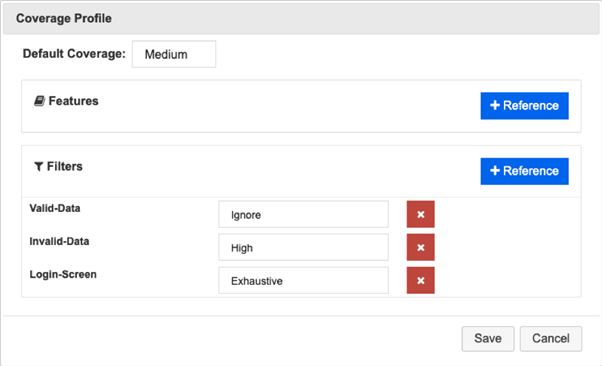

A granular approach to test coverage further enables automation that focuses on specific functionality within the system, rather than optimising based on logic alone. This targeted approach enables truly risk-based regression testing, focusing on newly added, high-risk and high visibility functionality in the smallest possible number of tests.

Test Modeller, for example, deploys the concept of “coverage profiles” to target given features during UFT test design. Blocks in the logical flowchart models are tagged as a “feature”, and a desired coverage profile is set for the tagged feature. All remaining logic is then tested to a desired “default coverage” level:

Figure 7 - A coverage profile created for performing negative testing against a login screen. The generated test cases will target scenarios where invalid data is entered into the screen. “Happy path” scenarios will be ignored, while logic contained in the surrounding model will be tested to a medium level of coverage.
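A heavily simplified way to picture a coverage profile is as a filter over generated paths. In the sketch below, the paths, the tagged blocks, and the `apply_profile` helper are all hypothetical: every path that touches the tagged feature is kept, while a simple cap on the remaining paths stands in, crudely, for a lower default coverage level.

```python
from typing import List, Set

# Hypothetical paths already generated from a login model.
PATHS: List[List[str]] = [
    ["Start", "Valid username", "Valid password", "Logged in"],
    ["Start", "Valid username", "Invalid password", "Error shown"],
    ["Start", "Invalid username", "Error shown"],
    ["Start", "Forgot password", "Reset email sent"],
]

# Blocks tagged as belonging to the "negative login" feature.
NEGATIVE_LOGIN: Set[str] = {"Invalid username", "Invalid password"}


def apply_profile(paths: List[List[str]], feature_blocks: Set[str],
                  default_limit: int) -> List[List[str]]:
    """Keep all paths touching the feature, plus a capped number of others."""
    targeted = [p for p in paths if feature_blocks.intersection(p)]
    remaining = [p for p in paths if not feature_blocks.intersection(p)]
    return targeted + remaining[:default_limit]


# Full coverage of negative login scenarios, one path for everything else.
selected = apply_profile(PATHS, NEGATIVE_LOGIN, default_limit=1)
for path in selected:
    print(" -> ".join(path))
```

Setting `default_limit` to zero in this sketch would drop the untagged paths entirely, leaving only the negative login scenarios.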

This granular approach to test coverage focuses automation where it is most likely to find the most severe bugs, helping to avoid the time, cost, and frustration they can create. It provides the flexibility to dynamically explode test coverage, focusing automation on given parts of the system. Combined with the improved efficiency of automated UFT test creation, QA can test more in shorter iterations, mitigating against the negative risk of defects as much as possible.

5. Overcome massive system complexity

As emphasised, today’s systems are complex – so complex that they are beyond the reach of human comprehension and the tests that human brain power can generate. This is especially true for end-to-end and integration tests, in which each component traversed in testing brings its own world of logic.

This vast complexity is one factor that makes rigorous end-to-end or integration testing so difficult. There is massive logical complexity when identifying which tests to execute, while the individual tests themselves are massively complex. The test steps and their associated data must link consistently across diverse components, as otherwise automated tests fail due to invalid and misaligned data.

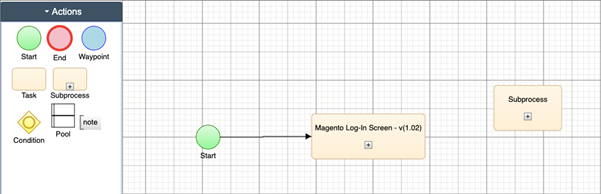

Model-Based Test design breaks this complexity down into discrete and solvable problems, while maximising re-usability to enable rapid test design for complex systems. It thereby helps overcome massive complexity during integrated and end-to-end testing – another reason to consider it for automated testing.

Every modelled component or piece of functionality in Test Modeller becomes an easily re-usable subflow, stored in a central repository. These subprocesses are then rapidly re-usable as building blocks when building master, end-to-end flows:

Figure 8 – Connecting re-usable subprocesses to form a master model for end-to-end test generation.

Each subflow comes equipped with the automation and data needed to execute the logic contained in it. Passing data variables from one subprocess to the next furthermore ensures the consistency of data, for reliable test execution first time. Applying automated coverage algorithms to the master model in turn identifies the smallest number of UFT tests and data required to “cover” the logic contained across the combined subflows.
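The way data flows between subprocesses can be sketched as a chain of functions that each receive and return a shared context. The subflow names, the context keys, and the `run_master_flow` helper below are hypothetical and only illustrate the principle of passing variables forward.

```python
from typing import Any, Callable, Dict, List

Context = Dict[str, Any]
Subflow = Callable[[Context], Context]


def register(ctx: Context) -> Context:
    """Subflow 1: create a user and publish the username for later subflows."""
    ctx = dict(ctx)
    ctx["username"] = "alice"
    ctx["steps"] = ctx["steps"] + ["Register user alice"]
    return ctx


def login(ctx: Context) -> Context:
    """Subflow 2: log in with the username produced by the previous subflow."""
    ctx = dict(ctx)
    ctx["session"] = f"session-for-{ctx['username']}"
    ctx["steps"] = ctx["steps"] + [f"Log in as {ctx['username']}"]
    return ctx


def place_order(ctx: Context) -> Context:
    """Subflow 3: place an order inside the session opened at login."""
    ctx = dict(ctx)
    ctx["steps"] = ctx["steps"] + [f"Place order in {ctx['session']}"]
    return ctx


def run_master_flow(subflows: List[Subflow], initial: Context) -> Context:
    """Run subflows in order, handing each one the context from the last."""
    ctx = dict(initial)
    for subflow in subflows:
        ctx = subflow(ctx)
    return ctx


result = run_master_flow([register, login, place_order], {"steps": []})
for step in result["steps"]:
    print(step)
```

Because the login and order steps read the values written by earlier subflows instead of hard-coding their own, the data stays aligned across the whole end-to-end journey.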

No system is too complex in this “low code” approach to test design, while end-to-end test design becomes faster with each newly modelled component added to the library. Meanwhile, millions of possible tests are optimised down to thousands or hundreds, enabling rigorous test execution in short sprints.

Fast and simple UFT test creation, not simple tests

Model-Based Test design is capable of building rigorous UFT tests from object repositories and function libraries that are themselves quick to create. This brings automation engineers and non-coders into close collaboration, enabling teams enterprise-wide to build UFT test scripts for complex systems. Meanwhile, the application of coverage algorithms ensures that the tests deliver true quality at speed, hitting all the ‘need-to-test’ areas.

Tune in next week to discover five further ways in which model-based testing can optimise and accelerate UFT test automation. You will discover how modelling can provide:

- Always-valid test data for UFT tests;

- A central asset to maintain tests at the pace of system change;

- A central point of collaboration for cross-functional teams;

- A reduction in costly design defects being perpetuated in code and tests;

- Maximal re-usability and minimal duplicate effort.

If you would like to see these benefits in action, please join us on March 12th 2020 for the next Vivit Webinar: Model-Based Testing for UFT One and UFT Developer: Optimized Test Script Generation.

Tom Pryce, Communication Manager with Curiosity Software Ireland